Noisy Channel : What is Nyquist Bit Rate and Shannon Capacity

Noiseless Channel :

Nyquist Bit Rate

• For a noiseless channel, the Nyquist bit rate formula defines the theoretical maximum bit rate:

$$\text{Bit Rate} = 2 \times B \times \log_2 L$$

• In this formula, B is the bandwidth of the channel, L is the number of signal levels used to represent data, and Bit Rate is the bit rate in bits per second.

• According to the formula, we might think that, given a specific bandwidth, we can have any bit rate we want by increasing the number of signal levels. Although the idea is theoretically correct, practically there is a limit.

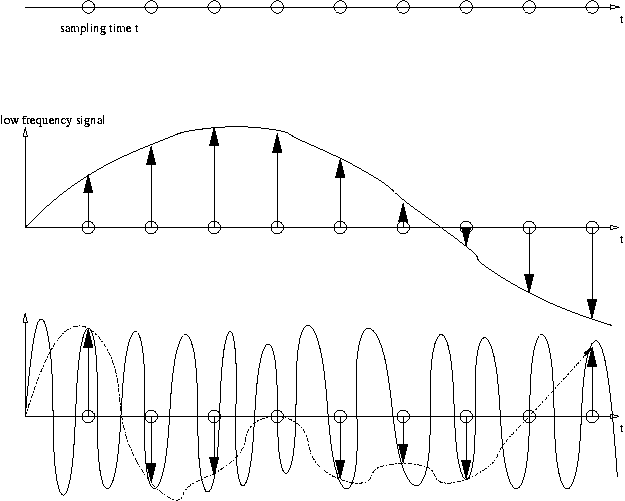

• When we increase the number of signal levels, we impose a burden on the receiver. If the number of levels in a signal is just 2, the receiver can easily distinguish between a 0 and a 1. If the number of levels is 64, the receiver must be very sophisticated to distinguish between 64 different levels. In other words, increasing the levels of a signal may reduce the reliability of the system.
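The Nyquist relationship described above can be tried out numerically. The following is a minimal sketch, not a definitive implementation; the function name `nyquist_bit_rate` and the 3000 Hz example bandwidth are assumptions chosen for illustration.

```python
import math


def nyquist_bit_rate(bandwidth_hz: float, levels: int) -> float:
    """Theoretical maximum bit rate (bits per second) of a noiseless channel.

    bandwidth_hz: channel bandwidth B in hertz.
    levels: number of signal levels L used to represent data (at least 2).
    """
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    if levels < 2:
        raise ValueError("at least 2 signal levels are required")
    return 2 * bandwidth_hz * math.log2(levels)


# Hypothetical 3000 Hz channel: doubling the number of levels
# adds only one extra bit per signal element.
for levels in (2, 4, 64):
    print(levels, nyquist_bit_rate(3000, levels))
```

With 2 levels the sketch gives 6000 bps and with 64 levels it gives 36000 bps. The bit rate grows only with the logarithm of the number of levels, while the receiver has to tell exponentially more levels apart.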

Noisy Channel : Shannon Capacity

• In reality, we cannot have a noiseless channel; the channel is always noisy.

• In 1948, Claude Shannon introduced a formula, called the Shannon capacity, to determine the theoretical highest data rate for a noisy channel:

$$\text{Capacity} = \text{bandwidth} \times \log_2 (1 + \text{SNR})$$

• In this formula, bandwidth is the bandwidth of the channel, SNR is the signal-to-noise ratio, and capacity is the capacity of the channel in bits per second.

• Note that in the Shannon formula there is no indication of the signal level, which means that no matter how many levels we have, we cannot achieve a data rate higher than the capacity of the channel. In other words, the formula defines a characteristic of the channel, not the method of transmission.
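As a rough illustration of the Shannon capacity, the sketch below computes the capacity from a bandwidth and a signal-to-noise ratio. The function names `shannon_capacity` and `db_to_linear`, and the example values (3000 Hz, 30 dB), are assumptions for illustration only. The formula expects SNR as a plain power ratio, so a value quoted in decibels is converted first.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio in decibels to a plain power ratio."""
    return 10 ** (snr_db / 10)


def shannon_capacity(bandwidth_hz: float, snr: float) -> float:
    """Theoretical highest data rate (bits per second) of a noisy channel.

    bandwidth_hz: channel bandwidth in hertz.
    snr: signal-to-noise ratio as a plain power ratio (not in dB).
    """
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    if snr < 0:
        raise ValueError("SNR cannot be negative")
    return bandwidth_hz * math.log2(1 + snr)


# Hypothetical 3000 Hz channel with an SNR of 30 dB.
capacity = shannon_capacity(3000, db_to_linear(30))
print(f"capacity is about {capacity:.0f} bps")

# With SNR = 0 the signal is lost in noise and the capacity is zero,
# whatever the bandwidth.
print(shannon_capacity(3000, 0))
```

The function takes no parameter for the number of signal levels, which mirrors the point above: the capacity depends only on the channel's bandwidth and noise.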

Latency & Jitter

Latency is generally defined as the time it takes for a packet of data to travel from a source to a receiver. This is also referred to as one-way latency. In simple terms, it is about half of the ping time, assuming the path is roughly symmetric. Sometimes the term round-trip latency, or round-trip time (RTT), is also used to define latency. This is the same as the ping time.

Jitter is defined as the variation in the delay (or latency) of received packets. It is also referred to as ‘delay jitter’.
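The two definitions can be illustrated with a short sketch. It makes two assumptions that are not in the text above: one-way latency is estimated as half of a measured ping time, and jitter is summarized as the mean absolute difference between consecutive packet delays. This is only one simple way to quantify delay variation, and the function names are hypothetical.

```python
from statistics import mean


def one_way_latency_estimate(rtt_ms: float) -> float:
    """Estimate one-way latency as half the round-trip (ping) time.

    Assumes the forward and return paths take about the same time.
    """
    return rtt_ms / 2


def jitter(delays_ms: list[float]) -> float:
    """Mean absolute difference between consecutive packet delays.

    Assumed definition for illustration; returns 0.0 when there are
    fewer than two samples, because no variation can be measured.
    """
    if len(delays_ms) < 2:
        return 0.0
    diffs = [abs(later - earlier)
             for earlier, later in zip(delays_ms, delays_ms[1:])]
    return mean(diffs)


# Hypothetical ping time of 40 ms gives a one-way estimate of 20 ms.
print(one_way_latency_estimate(40.0))

# Hypothetical delays of three received packets, in milliseconds.
print(jitter([20.0, 24.0, 22.0]))
```

A stream in which every packet has the same delay yields a jitter of zero under this definition, even if the latency itself is high.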
